Abstract

Intro Video

Method Overview

Our method consists of offline learning and online adaptation. First, we collect datasets of camera images and projected map images. We then feed this data to the value function network and perform offline Monte Carlo (MC) learning: the camera image and the map projection image are passed to separate encoders in parallel, and their features are aggregated to produce the state value function. During online deployment, we perform one additional temporal-difference (TD) adaptation step to obtain the refined value function.

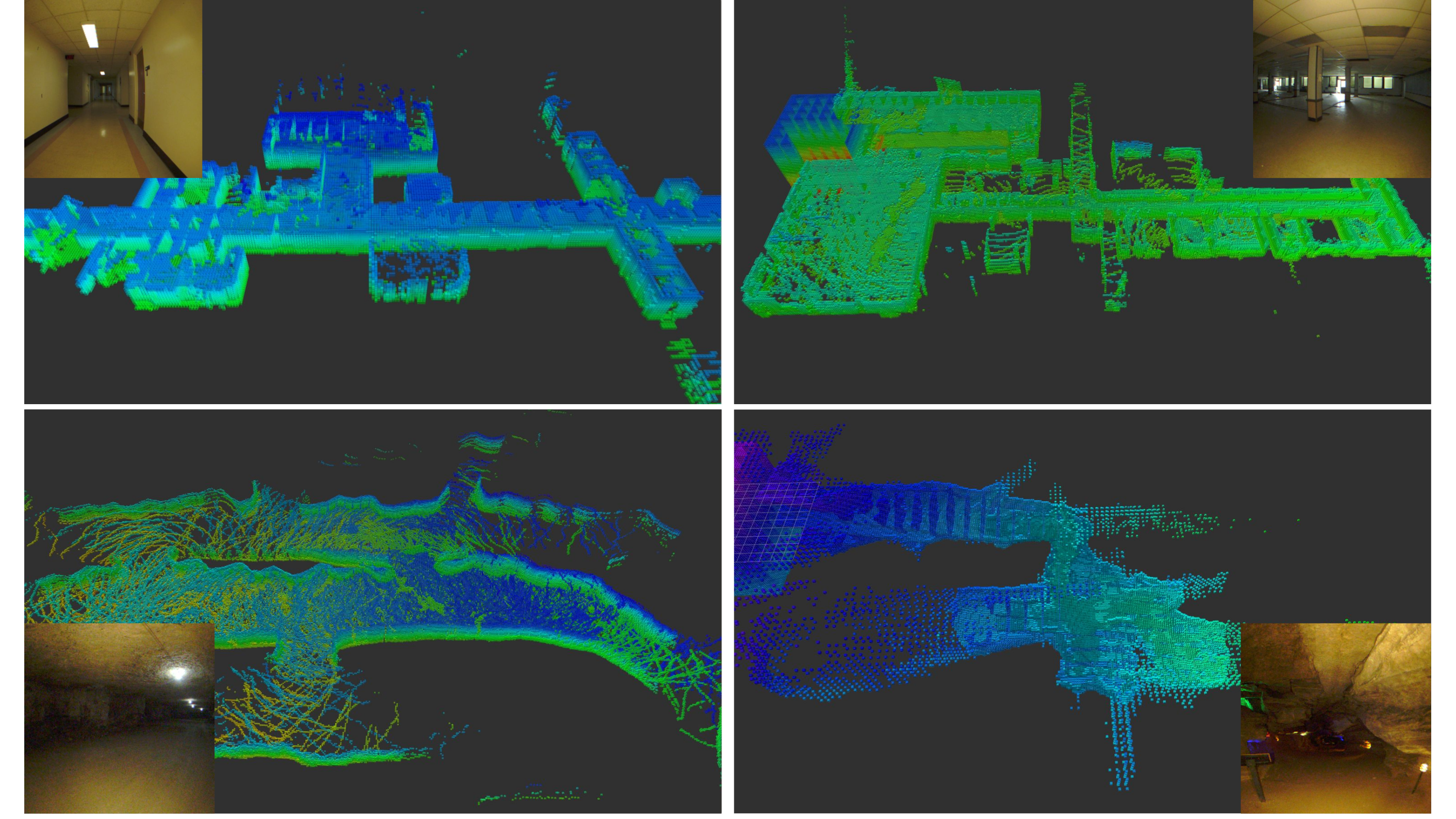
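The two learning stages described above can be illustrated with a minimal sketch. It is not the released implementation: the linear value function stands in for the value network, `fuse` stands in for aggregating the two encoder outputs, and all names, rewards, and hyperparameters (`gamma`, `lr`) are hypothetical choices made for illustration.

```python
from typing import List, Sequence


def mc_returns(rewards: Sequence[float], gamma: float) -> List[float]:
    """Discounted Monte Carlo return G_t for every step of one logged episode.

    These returns serve as regression targets for offline value learning.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Accumulate backwards: G_t = r_t + gamma * G_{t+1}
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def fuse(camera_feat: Sequence[float], map_feat: Sequence[float]) -> List[float]:
    """Aggregate the two encoder outputs (assumed here: plain concatenation)."""
    return list(camera_feat) + list(map_feat)


def value(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear stand-in for the value head: V(s) = w . phi(s)."""
    return sum(w * x for w, x in zip(weights, features))


def td_adapt(
    weights: Sequence[float],
    feat: Sequence[float],
    reward: float,
    next_feat: Sequence[float],
    gamma: float,
    lr: float,
) -> List[float]:
    """One semi-gradient TD(0) update, used here as the online adaptation step."""
    td_error = reward + gamma * value(weights, next_feat) - value(weights, feat)
    return [w + lr * td_error * x for w, x in zip(weights, feat)]


if __name__ == "__main__":
    # Offline stage: MC targets for a toy three-step episode.
    print(mc_returns([1.0, 0.0, 2.0], gamma=0.5))

    # Online stage: a single TD step on fused (camera, map) features.
    w = [0.0, 0.0]
    s = fuse([1.0], [0.0])
    s_next = fuse([0.0], [1.0])
    print(td_adapt(w, s, reward=1.0, next_feat=s_next, gamma=0.5, lr=0.5))
```

In this sketch the offline stage only needs logged rewards, while the online step bootstraps from the current value estimate of the next state, which is what lets it refine the value function during deployment.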

Datasets Collection Environments

Snapshots of the data collection environments. Each subfigure shows the 3D reconstructed occupancy grid map together with images captured during exploration (shown in the corner of the subfigure). From left to right and top to bottom: auditorium corridor, large open room, limestone mine, and natural cave.

Experimental Results

Regret Analysis in Corridor Environment

With the learned value function, the robot can make better decisions.

In Cave Environment

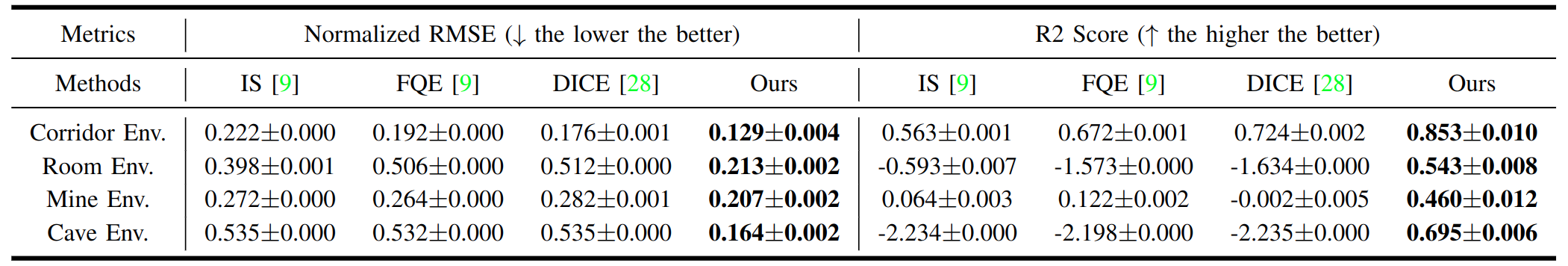

Quantitative Results

We compare our approach with other state-of-the-art (SOTA) off-policy evaluation (OPE) methods.

Real Robot Experiments

Robot Explores with Learned Value Function

Bag files replay

Exploration Behaviors Compared with Frontier-based Method

Ours with Learned Value

Frontier-based Method

With the learned value function, our method is able to explore high-value regions that the frontier-based method fails to explore.

BibTeX

@article{2022opere,
  author = {Yafei Hu and Junyi Geng and Chen Wang and John Keller and Sebastian Scherer},
  title = {Off-Policy Evaluation with Online Adaptation for Robot Exploration in Challenging Environments},
  booktitle = {IEEE Robotics and Automation Letters (RA-L) and IROS},
  year = {2023},
}